During the Second World War, the US Army would land its cargo planes on small, remote islands in the Pacific. On these islands it had built runways, control towers and radar to keep operations running smoothly and to supply the war effort with food, water, ammunition and general goods.


Flow: The essence of high-performing engineering organisations

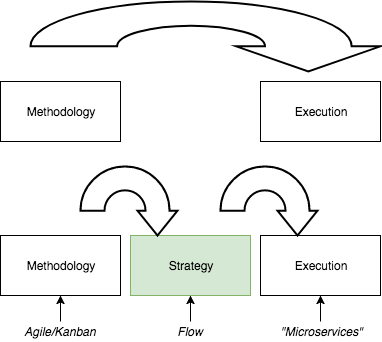

A focus on timelines ultimately results in longer cycle times. If we instead shift our focus to managing queues, we can optimize for flow and reduce both cycle times and the cost of delay.
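One standard way to see why queues drive cycle time is Little's Law: in a stable system, average cycle time equals average work in progress divided by average throughput. The sketch below is a minimal illustration, not a model of any real team. It assumes throughput stays constant regardless of queue size, and it uses a flat, hypothetical cost of delay per item per week. The function names and all numbers are invented for illustration.

```python
# Minimal sketch of Little's Law applied to a work queue.
# Assumptions (hypothetical): a stable system, constant throughput,
# and a flat cost of delay per item for every week it waits.

def cycle_time_weeks(wip_items: float, throughput_per_week: float) -> float:
    """Average weeks an item spends in the system: WIP / throughput."""
    if throughput_per_week <= 0:
        raise ValueError("throughput must be positive")
    return wip_items / throughput_per_week


def cost_of_delay_per_item(wip_items: float, throughput_per_week: float,
                           weekly_cost_of_delay: float) -> float:
    """Cost an average item accrues while waiting out its cycle time."""
    return cycle_time_weeks(wip_items, throughput_per_week) * weekly_cost_of_delay


if __name__ == "__main__":
    throughput = 5          # hypothetical: items finished per week
    weekly_cod = 1000       # hypothetical: cost of delay per item per week
    for wip in (30, 10):    # same team, long queue vs. short queue
        ct = cycle_time_weeks(wip, throughput)
        cod = cost_of_delay_per_item(wip, throughput, weekly_cod)
        print(f"{wip} items queued -> {ct:.1f} weeks, ${cod:,.0f} cost of delay per item")
    # prints:
    # 30 items queued -> 6.0 weeks, $6,000 cost of delay per item
    # 10 items queued -> 2.0 weeks, $2,000 cost of delay per item
```

Under these assumptions, the team does not work any faster; cutting the queue from 30 items to 10 alone cuts average cycle time from six weeks to two, and the cost of delay falls in proportion.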

The Process Delusion

Speed wins in software development. It sounds wrong, but it's true. When people hear this, they often can't see why: they confuse speed with rushing or with a drop in quality. Acting on this fallacy, they optimize for perceived quality, and more process follows.